Big data is the new oil, but only if you know where to drill.

Have you ever taken a data science course and been surprised that nobody mentioned where the data came from?

Here is the trick: many data scientists start with Kaggle datasets, which are free to download, explore, and analyze.

Whatever your level of experience or the machine learning approach you use, Kaggle gives you access to a huge catalog of real-world datasets. This guide walks through the process step by step: how to find the best sample datasets, and how to analyze and prepare them like a pro.

What Are Kaggle Datasets?

Kaggle datasets are freely available data resources shared by the data science community around the world.

They range from CSV spreadsheets and JSON files to image and text collections built for machine learning and analytics.

Think of Kaggle as a data library open to everyone: students, researchers, and AI developers.

You can use these datasets to practice Python, solve real-world problems, or train machine learning models on structured and unstructured data.

Most Kaggle datasets are free to use and come with a description, tags, and a license, so you can see what the data represents and how you are allowed to use it.

Simply put, if you are looking for ready-to-use datasets to analyze, Kaggle is the place to begin.

Why Use Kaggle Datasets for Data Science and Machine Learning?

Because Kaggle turns learning about data into hands-on practice.

Here is why millions of data professionals use Kaggle:

- Free of charge: Thousands of public datasets can be downloaded without any fee.

- Real-life scenarios: The datasets come from real industries such as finance, healthcare, marketing, and e-commerce.

- Ready for machine learning: Many datasets are already labeled, which makes them ideal for supervised learning projects.

- Learning together: You can view, fork, or comment on other users' analyses to learn faster.

- Portfolio development: Projects built on Kaggle datasets show employers that you can work with real data.

One survey reportedly found that over 65% of entry-level data scientists practiced and built their first models on Kaggle, which suggests that it is not only a platform but also a learning ecosystem.

In short, Kaggle datasets bridge the gap between theory and real-world data science.

That is where learning becomes meaningful in machine learning projects.

Understanding Kaggle Datasets

Before getting into data analysis or machine learning, it is worth knowing what makes Kaggle datasets valuable and how they are structured.

Kaggle does not merely store data; it is a whole ecosystem where you can find, study, and even publish your own datasets so that other people can learn from them.

What Types of Kaggle Datasets Are Available?

Kaggle covers nearly every type of data you might need for research, analytics, or a machine learning project.

The key categories you will encounter are:

- Tabular Data: Rows and columns in CSV or Excel files, best suited to learning data cleaning and manipulation.

- Text Data: Reviews, articles, or transcripts used in NLP (Natural Language Processing) projects.

- Image Data: Pictures used to train computer vision models.

- Audio Data: Files containing speech or other sounds, used for AI audio recognition.

- Time Series Data: Financial market data, weather data, or IoT sensor logs.

- BigQuery Datasets: Large public datasets hosted in the cloud and suited to large-scale analytics.

Each type serves a different purpose, from cleaning and visualizing sample datasets to building predictive machine learning models.

Common Dataset Categories and Use Cases

Kaggle groups datasets into categories to make exploration easier.

Here are some popular ones:

| Category | Example Use Case |
| --- | --- |
| Health & Medicine | Predict heart disease or analyze patient outcomes. |
| Finance & Economics | Forecast stock prices or detect fraud. |
| Marketing & E-commerce | Analyze sales patterns or customer churn. |
| Education & Research | Study student performance data. |
| Technology & AI | Train models using image or text data. |
| Social Science & Government | Examine census or demographic trends. |

These categories make it easy to find datasets that match your goals and level of expertise.

Public vs. Private Datasets

Kaggle datasets are divided into two major groups:

Public Datasets

Anyone can view, download, and use these in projects.

They are ideal for learning, practicing code, or working on open research.

Private Datasets

Access to these is restricted by the uploader or the competition host.

You may need to join a competition, accept its terms, or request permission to use them.

Most users begin with public datasets, since they are ready to use and often come with sample notebooks and clear licensing.

How Can I Access Kaggle Datasets for Free?

Data on Kaggle is easy to access and, most importantly, free.

Here’s how:

- Sign up: Create a free account at kaggle.com.

- Visit the Datasets tab: Browse thousands of datasets using the search options.

- Use filters: Narrow results by file type (CSV, JSON, SQLite), tags, or usability score.

- Preview before downloading: Kaggle's built-in Data Explorer lets you preview sample rows.

- Download: Click the Download button, or use the Kaggle API for large files.

Any publicly shared dataset can be accessed, downloaded, and analyzed, whether you are completing a data science project or just building a machine learning model.


How to Find the Best Kaggle Datasets for Your Project?

Choosing the right Kaggle dataset can make or break your project. With thousands of public datasets available, the challenge is not access but making a good choice. The goal is to find a dataset for analysis or machine learning that is relevant, reliable, and easy to work with.

Dataset Search Filters and Tags

Kaggle's search filters are your friend whenever you look for high-quality datasets to analyze.

You can narrow your search by:

- Tags: Choose topics such as healthcare, education, NLP, or computer vision.

- File types: Select CSV, JSON, SQLite, or image data.

- Usability score: Datasets are rated on a scale of up to 10 for structure and documentation.

- Votes and views: High engagement can indicate popularity and trusted quality.

- Recent updates: The more recently the data was updated, the more likely it is to be correct and current.

For the best results, combine filters. For example, search for a CSV dataset on a topic you enjoy with a usability score of 10. This makes it far more likely that you get a clean, structured sample dataset that is easy to explore.

Dataset Quality Indicators

Check the quality indicators of any dataset before you download it. These basic checks can save you hours of cleaning and confusion later.

- Completeness: Look for consistent values and few gaps in the records.

- Clarity: A clear description that explains each variable is a sign of well-documented data.

- Size and Format: The dataset should not exceed what your system can handle.

- Community Feedback: Read the comments to see why other users upvoted it.

- Author Reputation: Data from verified authors or competition hosts is usually more reliable.

Good public datasets let you concentrate on your problem rather than on repairing data.

Exploring Datasets for Beginners

On Kaggle, take small steps, particularly when you are new. Start with clean, familiar data and learn the principles of analysis and model development.

Some beginner-friendly options are:

- Titanic: Machine Learning from Disaster: Classification and prediction practice.

- Iris Dataset: Simple visualization and classification.

- Netflix Movies Dataset: Well suited to exploring how data relates to recommendation systems.

These sample datasets are easy to work with, and they form a sound basis for learning.

Evaluating Dataset Size and Usability

Size matters when choosing datasets to analyze, yet bigger is not necessarily better.

Small datasets are great for learning and prototyping. Medium-sized datasets leave more room for feature engineering. Large datasets require more processing power and suit advanced applications or cloud tools.

Check a dataset's usability rating before downloading. A score of 9 or 10 indicates that the data is well documented and well presented. This cuts preparation time and speeds up your analysis.

A Guide to Using Kaggle Datasets Effectively

Once you have chosen a dataset, you need to use it effectively. Knowing how to explore, understand, and handle your data helps you draw correct and valuable conclusions from it.

Navigating the Kaggle Dataset Interface

Each Kaggle dataset page includes exploration tools and background information. You can preview files in the Data Explorer and read related notebooks and community discussions. Use the metadata to verify details such as file size, format, and update frequency. This helps you judge whether the dataset suits your objectives before you download it.

Using Dataset Discussions and Metadata

Kaggle community discussions often include useful information on data quality, common mistakes, and cleaning methods. Reading them beforehand can save time and alert you to possible issues. The metadata section gives technical details such as the row count, file type, and license. When you work with machine learning data or large-scale projects, checking these details is essential.

Common Licenses and Ethical Data Usage

Datasets on Kaggle carry a license that specifies how they may be used. Some can be used commercially; others are limited to research and personal projects.

The most common licenses are:

- CC BY 4.0: You are free to copy and reuse the data as long as you give attribution.

- CC0: The creator waives their rights, so the work can be used without restrictions.

- ODC Public License: Typically used for government and open data projects.

Always check the license before using any dataset publicly or commercially. Transparent data use and ethical behavior are fundamental ideas in modern data science and a key part of Google's E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) criteria.

Collaborating and Sharing Datasets

Kaggle encourages teamwork. You can invite other people to work on your data, discuss it with them, or publish your own public datasets. Publishing your cleaned or combined datasets also helps you build credibility in the data community. Teamwork not only improves learning but also helps you build a professional portfolio that shows you can handle real-world datasets.

By understanding how to find, analyze, and work ethically with Kaggle datasets, you lay the groundwork for better data projects. These practices ensure that you spend less time searching for data and more time analyzing it and building meaningful machine learning models.


Step-by-Step Tutorial on Downloading Kaggle Datasets

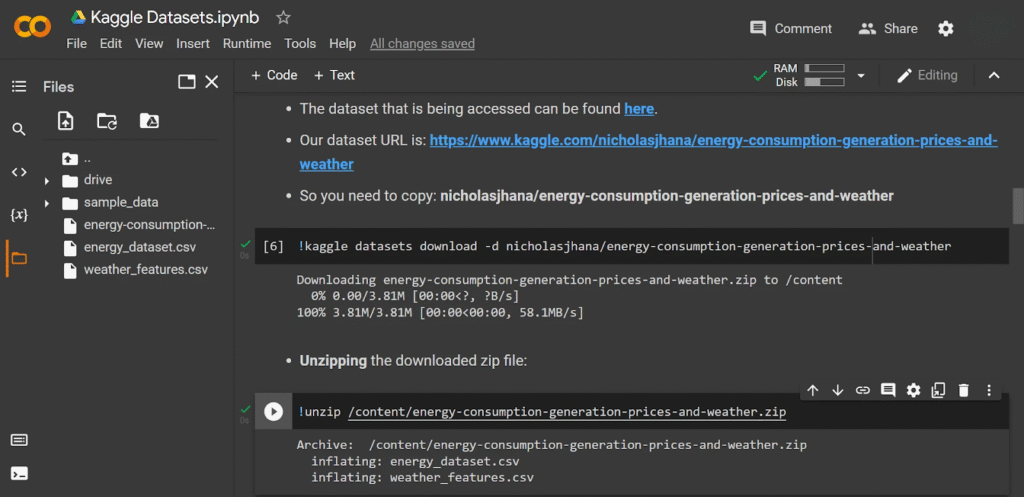

Once you know the process, downloading Kaggle datasets is easy. You can download them from the website or through the Kaggle API, then manage them in the tool of your choice.

These are the steps to get started.

Creating a Kaggle Account

- Go to kaggle.com and create a free account.

- You can register with your Google account or an email address.

- Once you are logged in, visit the Datasets section and browse public datasets on topics that interest you, such as health, finance, or machine learning.

Getting Your API Token

The Kaggle API lets you download and manage datasets from your command line.

To use it, you need an API token.

- Click your profile picture in the upper-right corner.

- Go to Account Settings.

- Scroll to the API section and click Create New API Token.

- A file named kaggle.json downloads automatically.

Keep this file safe; it holds your authentication information.

Installing the Kaggle CLI

The Kaggle command-line interface (CLI) lets you work with datasets quickly.

Install it with pip by running `pip install kaggle`.

Next, move the kaggle.json file to the hidden .kaggle folder in your home directory.

For example:
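A minimal Python sketch of this step, assuming the token was saved to your Downloads folder (an assumption; adjust the path to wherever your browser put it). The helper name `install_kaggle_token` is hypothetical and not part of the Kaggle tooling. It copies the file into `.kaggle` under the given home directory and restricts its permissions so other users on the machine cannot read your key.

```python
import os
import shutil
from pathlib import Path


def install_kaggle_token(token_file: Path, home: Path) -> Path:
    """Copy kaggle.json into <home>/.kaggle and restrict its permissions."""
    kaggle_dir = home / ".kaggle"
    kaggle_dir.mkdir(parents=True, exist_ok=True)
    target = kaggle_dir / "kaggle.json"
    shutil.copy(token_file, target)
    # Owner read/write only; on Windows this call has limited effect.
    os.chmod(target, 0o600)
    return target


# Usage (assumed download location):
# install_kaggle_token(Path.home() / "Downloads" / "kaggle.json", Path.home())
```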

This configuration lets your computer access Kaggle datasets securely.

Downloading Datasets via Browser and Terminal

There are two methods of downloading datasets:

Option 1: Using the Kaggle Website.

Open the dataset's page and click Download.

This works best when you have small sample datasets or want to play with the data first and analyze it later.

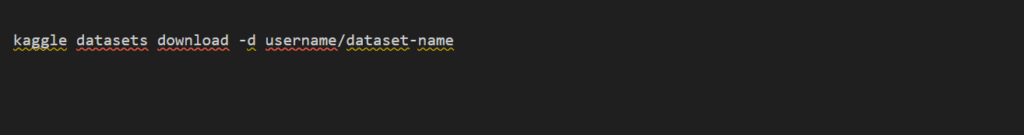

Option 2: Using the Kaggle CLI

For larger datasets, or to automate the download, run `kaggle datasets download -d <owner>/<dataset-name>` with the owner and dataset name taken from the dataset's URL.

After the download finishes, extract the files and analyze them in a tool of your choice.
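The download-and-extract step can be scripted. The sketch below is one possible wrapper, not an official client: the function names are hypothetical, the dataset identifier is a placeholder, and it assumes the Kaggle CLI is installed and your kaggle.json token is in place.

```python
import subprocess
import zipfile
from pathlib import Path


def build_download_command(dataset: str, dest: Path) -> list[str]:
    """Return the Kaggle CLI call that downloads `dataset` into `dest`."""
    return ["kaggle", "datasets", "download", "-d", dataset, "-p", str(dest)]


def extract_archives(dest: Path) -> list[str]:
    """Unzip every .zip file in `dest` and return the extracted file names."""
    extracted = []
    for archive in sorted(dest.glob("*.zip")):
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(dest)
            extracted.extend(zf.namelist())
    return extracted


def download_and_extract(dataset: str, dest: Path) -> list[str]:
    """Download a dataset with the CLI, then unzip it in place."""
    dest.mkdir(parents=True, exist_ok=True)
    # Needs the Kaggle CLI on PATH and a valid API token.
    subprocess.run(build_download_command(dataset, dest), check=True)
    return extract_archives(dest)


# Usage (placeholder identifier):
# download_and_extract("owner/dataset-name", Path("data"))
```

Keeping the command builder separate from the extraction makes each part easy to check without network access.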

Using Kaggle Datasets Offline (VS Code Setup)

If you prefer to work locally, you can also analyze Kaggle datasets offline with Visual Studio Code (VS Code).

- Install Visual Studio Code and the Python extension.

- Create a virtual environment for your project.

- Install Python data libraries (pandas and NumPy).

- Open a Jupyter notebook in VS Code and load your dataset.

Example:
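A first notebook cell might look like the sketch below. To keep it runnable anywhere, it reads a small inline sample that stands in for a downloaded file; the column names are illustrative, and with real data you would pass the path of your extracted CSV to `pd.read_csv` instead.

```python
import io

import pandas as pd

# Stand-in for a downloaded file; with real data use e.g. pd.read_csv("data/train.csv").
sample_csv = io.StringIO(
    "PassengerId,Age,Fare,Survived\n"
    "1,22,7.25,0\n"
    "2,38,71.28,1\n"
    "3,26,7.93,1\n"
)

df = pd.read_csv(sample_csv)

print(df.head())    # first rows of the table
print(df.shape)     # (number of rows, number of columns)
print(df.dtypes)    # data type of each column
```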

You can now explore and visualize your dataset offline, just as you would on Kaggle.

How to Clean and Prepare Kaggle Datasets for Analysis?

Once your dataset is downloaded, cleaning and preparation are the next important step.

Even high-quality public datasets typically contain missing, duplicate, or inconsistent values. Proper cleaning keeps your analysis and machine learning models accurate and efficient.

Handling Missing and Duplicate Data

The first thing to do is check your data for gaps and duplicates.

You can do this with basic pandas functions such as `isnull()` and `duplicated()`.
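As a small illustration of these checks, the sketch below counts missing values per column and fully duplicated rows. The DataFrame is made-up sample data, not taken from any Kaggle dataset.

```python
import numpy as np
import pandas as pd

# Made-up sample with one repeated row and two missing values.
df = pd.DataFrame({
    "name": ["Ann", "Bob", "Bob", "Cid"],
    "age": [29.0, 35.0, 35.0, np.nan],
    "fare": [7.25, 8.05, 8.05, np.nan],
})

missing_per_column = df.isnull().sum()         # missing values in each column
duplicate_rows = int(df.duplicated().sum())    # rows identical to an earlier row

print(missing_per_column)
print("duplicate rows:", duplicate_rows)
```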

Remove duplicates and decide how to treat missing values. Depending on the situation, you can drop them, replace them with averages, or interpolate. For example, gaps in the Age column of the Titanic dataset can be filled with the mean or median.
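The three options can be sketched with pandas as follows. The data is invented for illustration, and which option fits depends on your dataset; median filling is shown here only as one reasonable default.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "age": [22.0, np.nan, 30.0, 30.0, 58.0],
    "temperature": [10.0, np.nan, 14.0, 14.0, 20.0],
})

# 1. Drop exact duplicate rows (the repeated 30.0 / 14.0 row).
deduped = df.drop_duplicates().reset_index(drop=True)

# 2a. Option: drop every row that still has a missing value.
dropped = deduped.dropna()

# 2b. Option: fill missing ages with the median of the known ages.
filled = deduped.copy()
filled["age"] = filled["age"].fillna(filled["age"].median())

# 2c. Option: interpolate ordered numeric data between its neighbours.
filled["temperature"] = filled["temperature"].interpolate()

print(filled)
```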

A 2023 Google Cloud survey reportedly found that close to 80 percent of a data scientist's working time is spent cleaning and preparing data. Cleaning is not optional; it is a must.

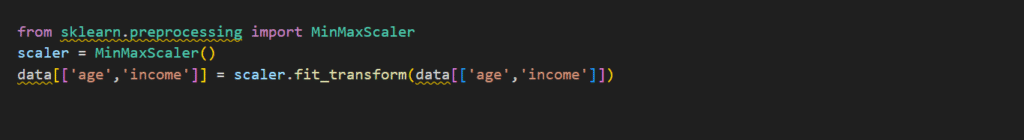

Data Formatting and Normalization

Formatting keeps columns and values consistent. Convert dates to one standard format, convert text to lowercase, and normalize numerical values. Normalization rescales your numerical data so that features with large values do not dominate the model.

Example:
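A short sketch of these formatting steps on invented data. Min-max scaling is used here as one common form of normalization; it is a choice made for this example, and standardization (z-scores) would be an equally valid alternative.

```python
import pandas as pd

df = pd.DataFrame({
    "signup_date": ["2023-01-05", "2023-02-05", "2023-03-17"],
    "city": ["  New York", "LONDON ", "Paris"],
    "income": [30000.0, 60000.0, 90000.0],
})

# Parse date strings into a proper datetime column.
df["signup_date"] = pd.to_datetime(df["signup_date"], format="%Y-%m-%d")

# Trim stray whitespace and lowercase text values.
df["city"] = df["city"].str.strip().str.lower()

# Min-max scaling: rescale income to the range 0..1.
income = df["income"]
df["income_scaled"] = (income - income.min()) / (income.max() - income.min())

print(df)
```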

Properly formatted machine learning data is easier to process and interpret.

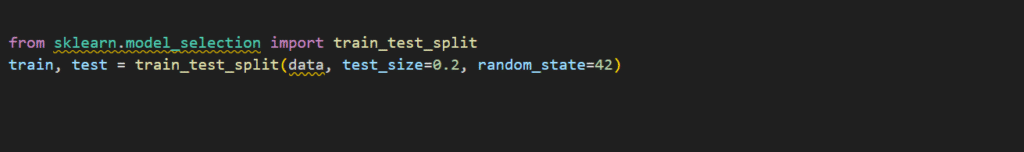

Splitting and Labeling Data

Before you train a model, split the data into training and testing sets.

This lets you test how your model performs on data it has not seen.

If you are working on a supervised learning task, make sure your target variable (label) is clearly defined. For example, the label in a spam detection dataset would be a column such as “is_spam”.
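A minimal sketch of the split using scikit-learn's `train_test_split`. The feature columns and values are invented for illustration; only the `is_spam` label name comes from the example above. The 80/20 ratio and the fixed `random_state` are conventional choices, not requirements.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Invented spam-detection sample: two features and the is_spam label.
df = pd.DataFrame({
    "num_links": [0, 5, 1, 7, 0, 6, 2, 8, 1, 9],
    "num_exclamations": [0, 3, 0, 4, 1, 5, 0, 6, 0, 2],
    "is_spam": [0, 1, 0, 1, 0, 1, 0, 1, 0, 1],
})

X = df.drop(columns="is_spam")   # features
y = df["is_spam"]                # label the model should predict

# Hold out 20% for testing; stratify keeps the spam ratio similar in both sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

print(len(X_train), "training rows,", len(X_test), "test rows")
```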

Preprocessing for Machine Learning

Preprocessing turns raw data into a form that a model can use.

This can involve encoding categorical variables, removing irrelevant columns, and handling outliers.

Example techniques include:

- Label Encoding: Convert categories into numeric codes.

- Feature Engineering: Create new, informative columns from existing ones.

- Outlier Removal: Remove extreme values that can distort model predictions.
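The three techniques above can be sketched with pandas alone. The data is invented (loosely Titanic-shaped), the `family_size` feature is a hypothetical example, and the 1.5 × IQR rule is just one common convention for flagging outliers.

```python
import pandas as pd

df = pd.DataFrame({
    "embarked": ["S", "C", "S", "Q", "S", "C"],
    "sibsp": [1, 0, 0, 3, 0, 1],
    "parch": [0, 0, 2, 1, 0, 0],
    "fare": [7.3, 71.3, 8.1, 21.1, 8.5, 512.3],
})

# Label encoding: map each category to an integer code (alphabetical order).
df["embarked_code"] = df["embarked"].astype("category").cat.codes

# Feature engineering: combine existing columns into a new one.
df["family_size"] = df["sibsp"] + df["parch"] + 1

# Outlier removal: keep fares within 1.5 * IQR of the quartiles.
q1, q3 = df["fare"].quantile(0.25), df["fare"].quantile(0.75)
iqr = q3 - q1
in_range = df["fare"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)
cleaned = df[in_range]

print(cleaned)
```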

The key to a successful machine learning project is clean, organized, and well-structured data.

With these steps, you can turn a messy Kaggle dataset into clean machine learning data that is ready for predictive modeling.


How to Analyze Kaggle Datasets for Data Science?

Analyzing Kaggle datasets is where the real learning happens.

After cleaning and shaping your data, the next step is to explore, visualize, and model it.

These steps help you discern the patterns, relationships, and insights hidden within your datasets.

Using Pandas and NumPy for Data Exploration

Pandas and NumPy are two of the most common Python libraries for data analysis.

They help you manipulate, filter, and summarize your public datasets efficiently.

Here is a quick workflow:
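One possible version of such a workflow, on an invented sales table: pandas handles the summary and filtering, and NumPy handles the element-wise arithmetic.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "region": ["north", "south", "north", "east", "south"],
    "units": [10, 4, 7, 12, 9],
    "price": [2.5, 4.0, 2.5, 3.0, 4.0],
})

# Summarize: count, mean, spread, and quartiles of the numeric columns.
print(df.describe())

# Filter: keep only the rows with more than 8 units sold.
high_volume = df[df["units"] > 8]

# Compute: element-wise revenue with NumPy arrays.
revenue = df["units"].to_numpy() * df["price"].to_numpy()

print(high_volume)
print("total revenue:", revenue.sum())
print("mean revenue:", np.mean(revenue))
```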

Pandas excels at exploring tabular data, whereas NumPy handles fast array calculations and operations.

Combining the two, you can easily find missing data, compute correlations, and get a feel for the character of your data.

Performing EDA (Exploratory Data Analysis)

Exploratory Data Analysis (EDA) is one of the most important steps in working with Kaggle datasets.

It helps you discover the structure of your data and the story it tells.

The common steps in EDA are:

- Checking distributions and summary statistics.

- Identifying correlations between variables.

- Spotting anomalies and unusual trends.

- Grouping and aggregating data to gain insight.

For example, when you examine a sample dataset of online purchases, you can determine which products sell the most or in which regions they perform best.
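The online-purchases example could be explored roughly like this. The orders table and its column names are invented for illustration; a real dataset would need its own column names.

```python
import pandas as pd

orders = pd.DataFrame({
    "product": ["mug", "lamp", "mug", "desk", "lamp", "mug"],
    "region": ["EU", "EU", "US", "US", "US", "EU"],
    "quantity": [3, 1, 2, 1, 4, 5],
    "unit_price": [8.0, 25.0, 8.0, 120.0, 25.0, 8.0],
})
orders["revenue"] = orders["quantity"] * orders["unit_price"]

# Which products sell the most units?
units_by_product = (
    orders.groupby("product")["quantity"].sum().sort_values(ascending=False)
)

# In which regions is revenue highest?
revenue_by_region = orders.groupby("region")["revenue"].sum()

# How do the numeric columns move together?
correlations = orders[["quantity", "unit_price", "revenue"]].corr()

print(units_by_product)
print(revenue_by_region)
print(correlations)
```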

EDA turns raw information into meaningful patterns that the following stages of your analysis build on.

Visualizing Data Insights

Visualization brings your data to life. Charts and graphs let you see trends that raw numbers alone do not reveal.

The most used tools are Matplotlib, Seaborn, and Plotly.

Example:
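A Matplotlib-only sketch that draws a histogram, a scatter plot, and a correlation heat map side by side. The passenger-style data is invented, and the output filename is arbitrary. The `Agg` backend line is only there so the script runs without a display; drop it inside a notebook.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend; remove this line in a notebook
import matplotlib.pyplot as plt
import pandas as pd

df = pd.DataFrame({
    "age": [22, 38, 26, 35, 54, 2, 27, 14],
    "fare": [7.3, 71.3, 7.9, 53.1, 51.9, 21.1, 11.1, 30.1],
    "survived": [0, 1, 1, 1, 0, 0, 1, 1],
})

fig, axes = plt.subplots(1, 3, figsize=(12, 3.5))

# Histogram: distribution of a single column.
axes[0].hist(df["age"], bins=5)
axes[0].set_title("Age distribution")

# Scatter plot: relationship between two columns.
axes[1].scatter(df["age"], df["fare"])
axes[1].set_xlabel("age")
axes[1].set_ylabel("fare")
axes[1].set_title("Age vs. fare")

# Heat map: pairwise correlations between all numeric columns.
corr = df.corr()
image = axes[2].imshow(corr.to_numpy(), cmap="coolwarm", vmin=-1, vmax=1)
axes[2].set_xticks(range(len(corr.columns)))
axes[2].set_xticklabels(corr.columns)
axes[2].set_yticks(range(len(corr.columns)))
axes[2].set_yticklabels(corr.columns)
axes[2].set_title("Correlations")
fig.colorbar(image, ax=axes[2])

fig.tight_layout()
fig.savefig("eda_plots.png")
```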

You can show distributions as histograms, relationships as scatter plots, and correlations as heat maps.

Visualization is not only about presentation. It is a way of finding connections in the datasets you study.

In machine learning, these plots can show which factors influence your target variable most.

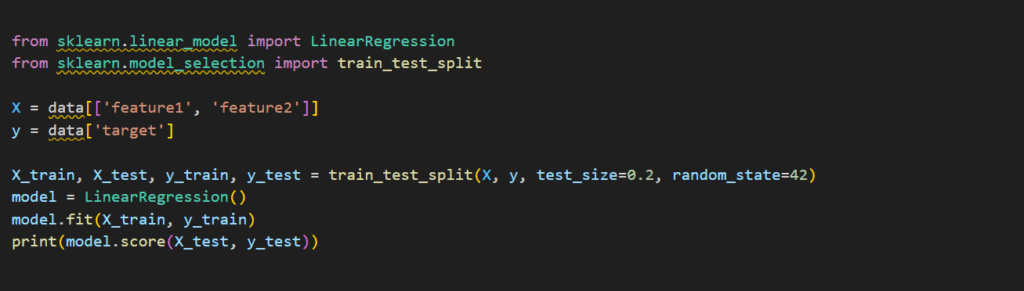

Building Basic Machine Learning Models

After exploring and interpreting your data, you can begin building machine learning models. Kaggle datasets are an excellent choice for beginners who want to practice prediction, classification, or clustering.

Start simple.

Train your first model with Scikit-learn, which provides user-friendly model-building tools.

Example:
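A first model might look like the sketch below. It trains a linear regression on synthetic house-price data generated inside the script (the coefficients and noise level are made up), so it runs without any download; with a real Kaggle dataset you would load the features and target from the CSV instead.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split

# Synthetic data: price depends on area and room count, plus random noise.
rng = np.random.default_rng(0)
area = rng.uniform(50, 200, size=100)
rooms = rng.integers(1, 6, size=100)
price = 1500 * area + 10000 * rooms + rng.normal(0, 5000, size=100)

X = np.column_stack([area, rooms])
X_train, X_test, y_train, y_test = train_test_split(
    X, price, test_size=0.2, random_state=0
)

# Fit on the training split, then evaluate on the held-out split.
model = LinearRegression().fit(X_train, y_train)
predictions = model.predict(X_test)

mae = mean_absolute_error(y_test, predictions)
r2 = model.score(X_test, y_test)

print("learned coefficients:", model.coef_)
print("mean absolute error:", mae)
print("R^2 on test data:", r2)
```

Comparing the learned coefficients with the values used to generate the data is a quick way to see how each feature relates to the target.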

This step is where your machine learning data becomes practical. Simple models such as Linear Regression or Decision Trees are easy to start with, and as you become more comfortable you can move on to more advanced ones (Random Forests or Neural Networks).

The first goal is not to chase accuracy. It is to see how your features relate to your target variable and how small changes in the data affect the results.

What Are the Top Kaggle Datasets for Data Analysis?

Thousands of users have worked with the best datasets on Kaggle, but a handful have emerged as community favorites because they are clean, well documented, and suited to both learning and real-world use.

These are the top 5 public datasets that are highly recommended for analysis.

Titanic: Machine Learning from Disaster

Probably the best-known dataset on Kaggle. This sample dataset teaches you how to predict survival from the details of each passenger, including age, gender, and ticket class. It is small, tidy, and perfect for newcomers studying classification problems.

House Prices: Advanced Regression Techniques

This dataset is one level up from Titanic and teaches you regression modeling. You forecast house prices from housing features such as area, location, and room count. It is among the best datasets for practicing feature engineering and model optimization.

Netflix Shows Dataset

This dataset includes details on Netflix TV shows and movies. You can examine trends by genre, country, or year of release. It is ideal for practicing data visualization and content analysis.

COVID-19 Open Data

This publicly available dataset contains worldwide figures on COVID-19 cases, vaccinations, and mobility. It is useful for time series analysis, forecasting, and public health studies. Because of its size and variety, it also teaches you how to handle large volumes of data.

ImageNet Sample Dataset

ImageNet is a collection of labeled images for training and testing computer vision models. Even a small subset can help you learn the fundamentals of image classification and deep learning. It is an excellent dataset if you are interested in machine learning data that is not text or tabular.

Each of these Kaggle datasets teaches a different skill, from cleaning and visualizing data to building more sophisticated machine learning models. They are popular because they appear so often in tutorials, competitions, and practice projects.

Starting with these sample datasets gives you an immediate feel for the most common patterns, formats, and pitfalls you will encounter in real data projects.


How Do I Evaluate Kaggle Datasets for Quality?

Not all Kaggle datasets are reliable. Quality checks before analysis save time and improve accuracy.

Dataset Ratings and Community Upvotes

Consider the dataset's usability rating and community votes. A high number of upvotes suggests that the data is clean, useful, and tested by other users. Reading the comments also reveals known problems and their solutions.

Data Completeness and Format Consistency

An excellent dataset has few missing values and rows. Before downloading, preview the files to confirm that they are properly labeled and formatted. Consistency is an important element of trustworthy machine learning data.

Author Reputation and Update Frequency

Check the creator's profile and the date of the latest update. High-quality datasets are often the ones maintained by verified authors or competition hosts. Frequent updates show that the data remains relevant.

Real-World Relevance and Documentation

Always read the description. A well-documented dataset explains what every column means and how the data was collected. Choose datasets that reflect realistic scenarios.

Why Use Kaggle Datasets for Machine Learning?

Kaggle brings machine learning practice to life, making it accessible, realistic, and free.

Real-World Practice with Labeled Data

Many open datasets are already labeled, so you do not need to label the data manually before training your models.

Access to Diverse Domains and Formats

Access data on healthcare, finance, marketing, and other topics. You get CSVs, images, and text files, everything you need for real projects.

Learning Through Competitions and Collaboration

In Kaggle competitions, you gain practical modeling experience. Studying the notebooks that others share exposes you to different approaches and techniques.

Building a Public Portfolio

Each project you add strengthens your profile. Employers want to see your analysis as evidence that you can handle sample datasets and real machine learning data.

Advanced Usage & Collaboration

Beyond downloading, Kaggle also lets you create, share, and help manage datasets.

Creating Custom Datasets

Share your personal or organizational datasets. Add titles, tags, and licenses so that others can find them.

Adding Collaborators to Datasets

Invite teammates to contribute or make edits. This works best for group or research projects.

Updating Datasets via JSON Config

Advanced users rely on JSON configuration files to automate dataset updates and keep version control clean.
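As a rough sketch of what such a configuration can look like, the Kaggle CLI reads a `dataset-metadata.json` file from the dataset folder. The helper below (a hypothetical name) writes a minimal one; the title, identifier, and license shown in the usage comment are placeholders, and the exact set of supported fields should be checked against the Kaggle API documentation.

```python
import json
from pathlib import Path


def write_dataset_metadata(
    folder: Path, title: str, dataset_id: str, license_name: str = "CC0-1.0"
) -> Path:
    """Write a minimal dataset-metadata.json into `folder` and return its path."""
    metadata = {
        "title": title,
        "id": dataset_id,                      # "<username>/<dataset-slug>"
        "licenses": [{"name": license_name}],
    }
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / "dataset-metadata.json"
    path.write_text(json.dumps(metadata, indent=2))
    return path


# Usage (placeholder values):
# write_dataset_metadata(Path("my-dataset"), "My Cleaned Data", "username/my-cleaned-data")
```

With the metadata file in place next to your data files, a new version can then be pushed from the command line with `kaggle datasets version -p my-dataset -m "Describe the change"`.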

Using Notebooks with Dataset Collaborators

Kaggle notebooks let several people analyze the same data together, which keeps the workflow simple and transparent.

Resources for Starting a Data Project

If you are ready to begin exploring Kaggle datasets, a handful of tools and resources can help you learn faster and smarter.

Kaggle Learn Courses

Kaggle also provides free micro-courses on Python, data cleaning, visualization, and machine learning. These lessons include sample datasets, so you can apply what you learn right away.

Sample Data Sets for Practice

Practice on easy-to-use datasets such as Titanic, Iris, or House Prices. They are small, clean, and ideal for learning how to handle, visualize, and model data.

Recommended Tools and Environments

Do your analysis in Jupyter Notebook, Google Colab, or VS Code. All of them work with Python libraries such as Pandas, NumPy, and Scikit-learn, which are standard choices for working with datasets.

GitHub and BigQuery Integration

You can also connect Kaggle with GitHub to share code or store public datasets. For large-scale projects, Google BigQuery lets you analyze huge volumes of machine learning data without downloading files.


Conclusion

Kaggle is now one of the largest sites for exploring and working with the datasets that drive real-world data science and AI initiatives. It provides thousands of public datasets, from ready-made sample datasets to sophisticated machine learning data, so that anyone can practice, analyze, and build predictive models.

Working with high-quality data through cleaning, visualization, and modeling also gives you practical experience in turning raw information into actionable insight. Whether you are a student or a professional, Kaggle offers what you need to develop your expertise with real, practical data.

Frequently Asked Questions (FAQs)

How Can I Access Kaggle Datasets for Free?

Go to Kaggle, create a free account, and visit the Datasets section. All public datasets are provided free of charge.

Why Use Kaggle Datasets for Machine Learning?

They contain real-world, labeled data that helps you practice building models and solving real-world data problems.

What Types of Kaggle Datasets Are Available?

Kaggle offers tabular, text, image, and time-series data on topics such as healthcare, finance, and technology.

How Do I Evaluate Kaggle Datasets for Quality?

Check the votes, author reputation, completeness, and documentation before you download any dataset.

What Are the Top Kaggle Datasets for Data Analysis?

Popular choices include Titanic, House Prices, Netflix Shows, COVID-19 Open Data, and the ImageNet sample dataset.