Is your data scattered across multiple systems?

Slow, inconsistent, and messy data makes it frustratingly hard to reach smart business decisions.

This guide shows how modern data integration systems, proven evaluation metrics, and professional best practices can unify your data, improve accuracy, and deliver real-time information for better outcomes.

What is Data Integration?

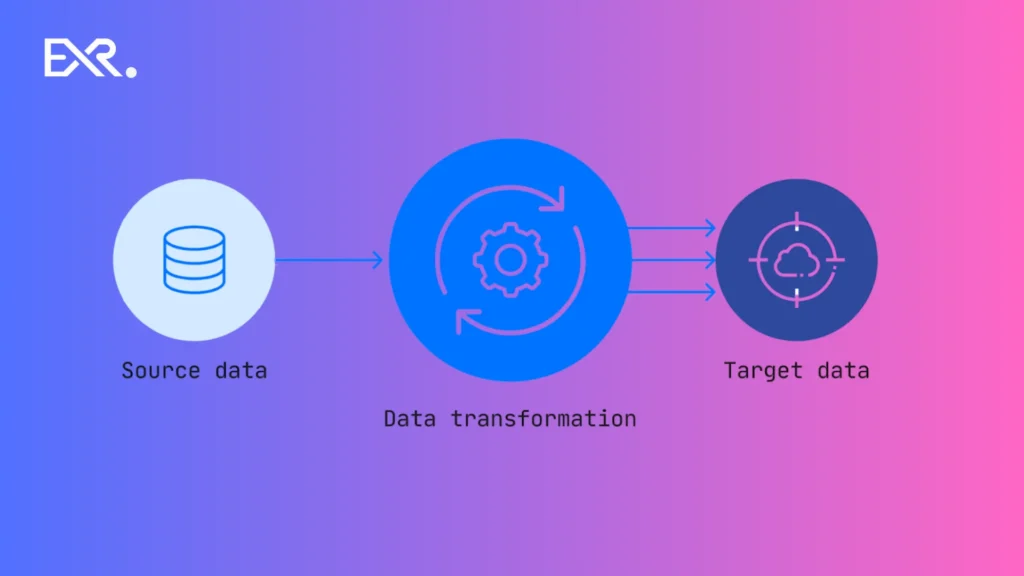

Data integration is the process of combining data from various sources, such as databases, cloud systems, applications, and spreadsheets, into a unified format. It removes information silos, keeps data consistent, and improves data quality.

Modern data integration supports business intelligence, analytics, reporting, compliance, and AI-enhanced analysis by providing real-time insights and faster decision-making. With the right data integration tools, companies can streamline their workflows, improve operational efficiency, and turn raw data into useful information.

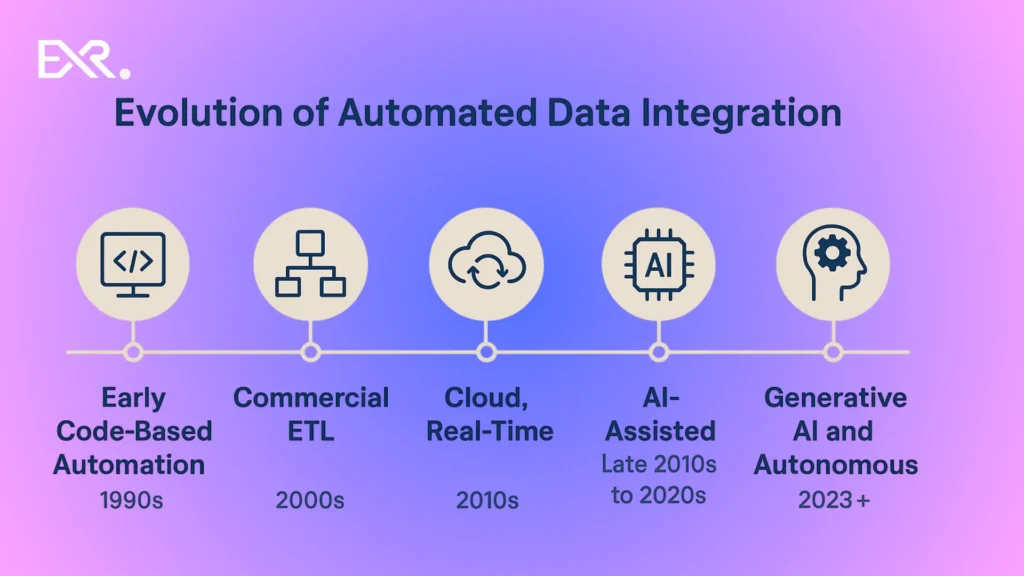

The Evolution of Data Integration: A Historical Overview

Data integration has changed dramatically over the last several decades. What began as simple manual procedures has grown into tooling that lets companies combine data from many sources, assure data quality, and deliver real-time data.

Understanding this evolution helps organizations select the right data integration strategy, strengthen business intelligence, and use analytics to make smarter decisions.

Early Data Integration: Manual and File-Based Systems (1980-2000)

In the early days, data integration was largely manual and depended on spreadsheets, flat files, and hand-written scripts. Organizations struggled with data silos, inconsistent data formats, and poor visibility across departments.

Batch processing, slow reporting, and human error were the norm, which made it hard to get timely insights or assure data quality.

The Rise of ETL and Enterprise Integration (2000-2015)

As data volumes grew, enterprises shifted to ETL (Extract, Transform, Load) tools and enterprise integration platforms.

These solutions automated extracting data from several sources, transforming it, and loading it into central data warehouses, which improved reporting and business intelligence. Firms could now process larger volumes of data, improve data management, and minimize human error.

Cloud Transformation Era (2015-2020)

The cloud revolution changed the face of data integration. iPaaS (integration platform as a service) and cloud-based systems enabled organizations to link databases, SaaS applications, and APIs in real time. This period focused on scalability, flexibility, and cost efficiency, and it made faster analytics and self-service BI possible.

Companies could consolidate various sources of data without heavy spending on infrastructure.

Making Data Integration Smart and Automated (2021-2026)

Modern platforms use AI, machine learning, and automation to simplify complex data pipelines. Features such as real-time data streaming, metadata management, and predictive data quality allow businesses to make faster and smarter decisions.

These solutions reduce manual effort, improve data consistency, and support advanced analytics and AI-powered insights across various systems and cloud environments.

How the Data Integration Process Works

An effective data integration strategy does more than move data: it combines, cleans, and manages data so that your business ends up with trusted information. From identifying sources to securing the results, every stage is needed to support accurate analytics, business intelligence, and real-time insights.

Identifying the Right Data Sources

The first step is to locate your data: databases, cloud-based applications, SaaS applications, IoT devices, or spreadsheets. For example, an e-commerce firm might extract customer information from Salesforce, inventory information from SAP, and web analytics from Google Analytics. Identifying the correct sources focuses your integration effort on the high-value information that drives business decisions.

Extracting and Loading Data

Once the sources are identified, the data is extracted and loaded into a central system, such as a data warehouse or a data lake. For example, a retail company can automatically pull sales and inventory data every day and load it into Snowflake using Fivetran or Talend. This step makes data available for processing without interfering with source systems.
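As a rough illustration of the extract-and-load step, the sketch below reads a small CSV export and loads it into a staging table. Everything here is an assumption made for the example: the CSV content, the `staging_orders` table, and the use of an in-memory SQLite database as a stand-in for a warehouse. It does not show how Fivetran, Talend, or Snowflake work internally.

```python
import csv
import io
import sqlite3

# Hypothetical daily export from a source system (stands in for a real extract).
SOURCE_CSV = """order_id,sku,quantity
1001,SKU-1,2
1002,SKU-2,1
1003,SKU-1,5
"""

def extract(csv_text):
    """Read rows from the source export without modifying it."""
    return list(csv.DictReader(io.StringIO(csv_text)))

def load(conn, rows):
    """Load raw rows into a staging table and return the table's row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS staging_orders "
        "(order_id INTEGER PRIMARY KEY, sku TEXT, quantity INTEGER)"
    )
    # INSERT OR REPLACE keeps the load idempotent if the same export is re-run.
    conn.executemany(
        "INSERT OR REPLACE INTO staging_orders VALUES (:order_id, :sku, :quantity)",
        rows,
    )
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM staging_orders").fetchone()[0]

conn = sqlite3.connect(":memory:")  # assumed stand-in for a real warehouse
print(load(conn, extract(SOURCE_CSV)))
```

Keying the staging table on `order_id` is one possible design choice: it lets a failed daily job be re-run without creating duplicate rows.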

Mapping and Transforming Data

Raw data usually arrives in different formats. Mapping and transformation standardize it so that it can be analyzed. For example, merging US and EU customer databases may require converting currencies, standardizing date formats, and standardizing product categories. Tools such as Informatica or Matillion handle these transformations.
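The US and EU merge described here could be sketched as follows. This is a minimal example, not a real tool's behavior: the field names, the fixed exchange rate, the date formats, and the category map are all hypothetical values chosen for illustration.

```python
from datetime import datetime
from decimal import Decimal, ROUND_HALF_UP

# Illustrative values only: a fixed rate and a hand-written category map.
EUR_TO_USD = Decimal("1.10")  # assumed rate, not a real quote
CATEGORY_MAP = {"Schuhe": "Footwear", "shoes": "Footwear", "Footwear": "Footwear"}
DATE_FORMATS = {"US": "%m/%d/%Y", "EU": "%d.%m.%Y"}

def transform(record, region):
    """Map one regional order record onto a shared schema."""
    amount = Decimal(record["amount"])
    if record["currency"] == "EUR":
        amount = amount * EUR_TO_USD
    parsed = datetime.strptime(record["order_date"], DATE_FORMATS[region])
    return {
        "order_date": parsed.date().isoformat(),  # ISO 8601 for both regions
        "amount_usd": str(amount.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)),
        "category": CATEGORY_MAP.get(record["category"], "Unknown"),
    }

us = transform(
    {"order_date": "03/31/2024", "amount": "20.00", "currency": "USD", "category": "shoes"},
    "US",
)
eu = transform(
    {"order_date": "31.03.2024", "amount": "20.00", "currency": "EUR", "category": "Schuhe"},
    "EU",
)
print(us)
print(eu)
```

`Decimal` is used instead of `float` so that currency amounts round predictably.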

Validating Data and Ensuring Quality

Once transformed, the data should be checked for accuracy, completeness, and consistency. For example, a financial firm that integrates transaction records needs to ensure that no transactions are missing or duplicated. Reporting and analytics depend on trusted data, and automated data quality tools such as Ataccama can help keep it that way.
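A check for missing, duplicated, and incomplete transactions might look like the sketch below. The record layout, the `REQUIRED_FIELDS` tuple, and the `validate` helper are assumptions for this example and do not represent any particular data quality product.

```python
from collections import Counter

REQUIRED_FIELDS = ("txn_id", "account", "amount")  # hypothetical schema

def validate(source_ids, loaded):
    """Compare loaded transactions against the IDs expected from the source."""
    loaded_ids = [t["txn_id"] for t in loaded]
    counts = Counter(loaded_ids)
    return {
        "missing": sorted(set(source_ids) - set(loaded_ids)),
        "duplicated": sorted(i for i, n in counts.items() if n > 1),
        "incomplete": sorted(
            {t["txn_id"] for t in loaded
             if any(t.get(f) in (None, "") for f in REQUIRED_FIELDS)}
        ),
    }

source_ids = ["T1", "T2", "T3", "T4"]
loaded = [
    {"txn_id": "T1", "account": "A-1", "amount": "10.00"},
    {"txn_id": "T2", "account": "A-2", "amount": "25.50"},
    {"txn_id": "T2", "account": "A-2", "amount": "25.50"},  # loaded twice
    {"txn_id": "T4", "account": "A-3", "amount": None},     # amount lost
]
report = validate(source_ids, loaded)
print(report)
```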

Synchronizing Data Between Systems

Data changes constantly, so synchronization keeps all systems updated with current information. For example, if a customer changes their email in Salesforce, the update should appear in the marketing automation tool right away. Whether through real-time processing or periodic batches, tools such as Fivetran or AWS Glue keep data up to date.
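One common way to approach periodic synchronization is an incremental sync driven by a "last updated" watermark. The sketch below assumes each record carries an `updated_at` timestamp and uses plain dictionaries as stand-ins for the CRM and the marketing tool. The `sync_changes` helper is hypothetical and is not how Fivetran or AWS Glue are implemented.

```python
from datetime import datetime

def sync_changes(source, target, last_synced_at):
    """Copy records changed since the last run and return the new watermark."""
    newest = last_synced_at
    for record_id, record in source.items():
        if record["updated_at"] > last_synced_at:
            target[record_id] = dict(record)  # copy so the systems stay independent
            newest = max(newest, record["updated_at"])
    return newest

crm = {
    "c1": {"email": "a@example.com", "updated_at": datetime(2024, 1, 1, 9, 0)},
    "c2": {"email": "new@example.com", "updated_at": datetime(2024, 1, 2, 9, 0)},
}
marketing = {
    "c1": {"email": "a@example.com", "updated_at": datetime(2024, 1, 1, 9, 0)},
    "c2": {"email": "old@example.com", "updated_at": datetime(2024, 1, 1, 9, 0)},
}

watermark = sync_changes(crm, marketing, datetime(2024, 1, 1, 12, 0))
print(marketing["c2"]["email"], watermark)
```

Storing the returned watermark and passing it to the next run means each batch only touches records that changed since the previous one.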

Managing Governance, Security, and Metadata

Finally, effective data governance and security provide compliance and control. Metadata management traces where data originated, how it was transformed, and who can access it. For example, healthcare information covered by HIPAA has to be encrypted and access-restricted. Tools such as Collibra or Alation help enforce policies and track lineage and compliance.

Turn Your Data Into Actionable Insights with EXRWebflow. Explore Our Data Engineering Services Today!

How to Evaluate a Data Integration Strategy

Evaluating a data integration strategy is crucial to ensuring that your organization can integrate, clean, and analyze data efficiently. A sound assessment identifies the tools, connectors, and processes that align with business objectives, preserve data quality, and support analytics, business intelligence, and AI-based insights.

Why Data Integration Evaluation Is Critical

Without evaluation, organizations are exposed to inefficient processes, poor data quality, and a lack of insight. For example, a retailer that integrates sales and inventory information without adequate controls can experience stock shortages or inaccurate reporting.

Evaluating the strategy ensures that the chosen ETL/ELT tools, connectors, and cloud platforms meet business, regulatory, and performance requirements.

Key Metrics for Evaluating Data Integration

Effective evaluation relies on clear metrics:

- Data Accuracy and Completeness: All required fields must be populated, and populated correctly. For example, Salesforce customer records should match those in the billing system.

- Performance and Scalability: Measures how quickly pipelines handle growing data volumes. Tools such as Matillion or Fivetran are designed to scale to high volumes.

- Reliability and Uptime: Checks whether integration pipelines run consistently without failing mid-load.

- Security and Regulatory Compliance: Assesses encryption, access controls, and compliance with GDPR, HIPAA, or industry standards.

- Cost and Operational Efficiency: Weighs platform costs, maintenance, and ROI, helping teams select tools that fit the budget without sacrificing quality.
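The first metric in the list can be turned into numbers fairly directly. The sketch below shows one possible way to compute a completeness score and a CRM-to-billing match rate. The helper names, field names, and sample records are assumptions for illustration, and real programs may define these metrics differently.

```python
def completeness(records, required_fields):
    """Share of records with every required field populated."""
    if not records:
        return 0.0
    complete = sum(
        all(r.get(f) not in (None, "") for f in required_fields) for r in records
    )
    return complete / len(records)

def match_rate(crm, billing, key, field):
    """Share of CRM records whose field equals the billing value for the same key."""
    if not crm:
        return 0.0
    billing_by_key = {r[key]: r for r in billing}
    matches = sum(
        1 for r in crm
        if r[key] in billing_by_key and billing_by_key[r[key]].get(field) == r.get(field)
    )
    return matches / len(crm)

crm = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "b@example.com"},
    {"id": 3, "email": ""},
    {"id": 4, "email": "d@example.com"},
]
billing = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "b-old@example.com"},
    {"id": 3, "email": "c@example.com"},
    {"id": 4, "email": "d@example.com"},
]

print(completeness(crm, ("id", "email")))
print(match_rate(crm, billing, "id", "email"))
```

Tracking these ratios per pipeline run makes it easier to spot a regression in data quality before it reaches reports.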

Real-Time vs Batch Integration: The Right Choice

The choice between real-time and batch processing depends on business requirements.

- Real-Time Integration: Useful when decisions depend on instant updates, such as in e-commerce or financial transactions. Tools such as Fivetran, Kafka, or AWS Kinesis support this.

- Batch Integration: Suited to periodic reporting, such as a weekly sales summary, with software such as Talend or Informatica PowerCenter.

The right method is the one that balances performance, cost-effectiveness, and timely insights.

Balancing Cost and Performance

High-performance integration pipelines are not cheap. Evaluating tools means balancing:

- Costs of infrastructure (cloud and on-premise)

- Maintenance work (manual ETL vs automated platform)

- Speed of processing (real time or batch)

For example, a mid-sized organization may choose Matillion on Snowflake as an economical and scalable ELT option instead of developing its own pipelines.

A Practical Decision-Making Framework

A framework helps organizations assess and choose the right integration strategy:

- Identify business objectives and key data sources.

- Determine necessary connectors and tools.

- Measure performance, security, and compliance.

- Model costs and ROI.

- Pilot, test, and refine before full rollout.

This structured methodology helps ensure that integration pipelines satisfy both technical and business requirements.

Popular Connectors and Data Sources

High-quality connectors are essential for smooth integration. Examples include:

- Amazon S3: Scalable cloud storage for data lakes.

- PostgreSQL: Relational database integration for structured data.

- SAP: Enterprise resource planning (ERP) integration for operational data.

- Salesforce: Customer relationship management (CRM) integration.

- Google Analytics: Web and app analytics integration for marketing insights.

- Custom Connectors: Support for proprietary systems or systems not currently supported.

Choosing appropriate connectors provides a smooth, error-free flow of data into analytics.

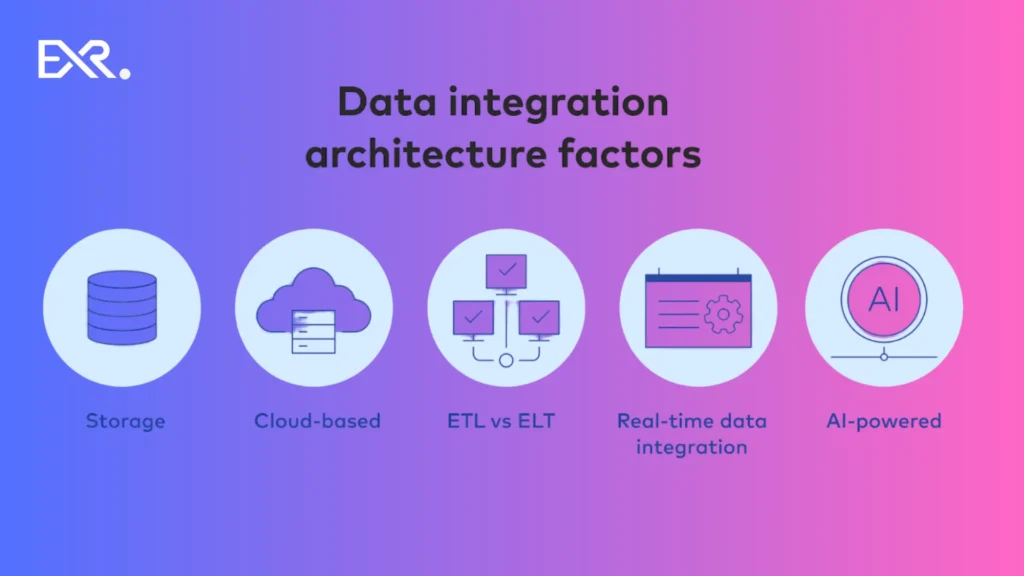

Best Practices for a Successful Data Integration Architecture

Following best practices helps ensure that your data integration strategy produces accurate, timely, and reliable insights. Well-organized operations and appropriate tools help firms minimize errors, improve data quality, ensure security and compliance, and run lean operations.

Setting Clear Business and Technical Goals

Begin by establishing your organization's goals for data integration. For example, a retailer may want to consolidate sales, inventory, and customer information to support real-time business intelligence and predictive analytics. Clear objectives guide tool selection, connector choice, and workflow design.

Building Strong Data Governance Foundations

To keep data consistent, accurate, and trusted across systems, establish data governance policies. This involves assigning data owners, defining quality criteria, and tracking data provenance. Tools such as Collibra or Alation can help enforce compliance and data accountability.

Prioritizing Security and Compliance

Data integration often involves sensitive data. Best practices include encryption, access controls, and regulatory compliance (e.g., GDPR, HIPAA). In healthcare, for example, patient data must be handled with strong security that protects PHI while still allowing analytics.

Optimizing for Performance

Efficient pipelines reduce latency and improve real-time insight. This involves using cloud-native tools, optimizing ETL jobs, and monitoring workflow performance. For example, Matillion or Fivetran can process large data loads without slowing analytics.

Planning for Growth and Scalability

Integration systems need to scale as data volumes increase, without performance degrading. Plan for a modular architecture, scalable infrastructure, and automated pipelines that can absorb future growth. For example, a SaaS company can use Snowflake or AWS Glue to expand ETL operations across regions.

Schedule a 30-Minute Call with EXRWebflow and Discover Smarter Data Solutions.

Key Takeaways

Successful data integration requires clear objectives, good governance, effective security, optimized performance, and a scalable architecture. With these best practices and suitable tools and connectors, a business can turn fragmented data into actionable information, enable real-time analytics, and make better decisions.

Frequently Asked Questions

What is data integration, and why does it matter?

Data integration is the process of connecting information from diverse sources into a single, coordinated view. It eliminates silos, improves data accuracy, and enables real-time insight, analytics, and better business intelligence.

What is the difference between ETL and ELT?

ETL (Extract, Transform, Load): Data is transformed before it is loaded into the target system.

ELT (Extract, Load, Transform): Data is loaded first and then transformed within the target system. The choice depends on data volume, processing speed, and the level of integration required.
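To make the difference concrete, here is a small sketch that runs the same cleanup both ways, using in-memory SQLite databases as stand-ins for a target system. The table names, sample rows, and `clean` helper are assumptions for this example.

```python
import sqlite3

raw_rows = [("  Alice ", "alice@EXAMPLE.com"), ("Bob", "BOB@example.com")]

def clean(name, email):
    """Pipeline-side transformation used by the ETL path."""
    return name.strip(), email.lower()

# ETL: transform in the pipeline, then load only the cleaned rows.
etl = sqlite3.connect(":memory:")
etl.execute("CREATE TABLE customers (name TEXT, email TEXT)")
etl.executemany("INSERT INTO customers VALUES (?, ?)", [clean(*r) for r in raw_rows])

# ELT: load raw rows as-is, then transform with SQL inside the target.
elt = sqlite3.connect(":memory:")
elt.execute("CREATE TABLE raw_customers (name TEXT, email TEXT)")
elt.executemany("INSERT INTO raw_customers VALUES (?, ?)", raw_rows)
elt.execute(
    "CREATE TABLE customers AS "
    "SELECT TRIM(name) AS name, LOWER(email) AS email FROM raw_customers"
)

query = "SELECT name, email FROM customers ORDER BY name"
etl_result = etl.execute(query).fetchall()
elt_result = elt.execute(query).fetchall()
print(etl_result == elt_result)
```

Both paths end with the same cleaned table. The ELT path also keeps `raw_customers` in the target, which allows transformations to be re-run later at the cost of extra storage.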

What role do APIs play in modern data integration?

APIs let applications and services exchange data. They make integration faster, more reliable, and more scalable, supplying a flow of data across systems in real time or near real time.

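How do you measure data integration performance?

Measure it against the metrics covered earlier in this article: data accuracy and completeness, pipeline performance and scalability, reliability and uptime, security and regulatory compliance, and cost and operational efficiency.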

What are the best data integration tools to use in the cloud?

Cloud-based integration tools have to be scalable, automated, and able to connect with multiple data sources. The most common options include cloud ETL tools, iPaaS, and connectors compatible with SaaS applications and cloud data warehouses.

Which data integration tool is the most suitable today?

The best tool depends on your organization. Consider factors such as scalability, automation, security, cost, and connector availability when choosing an effective solution for your business.